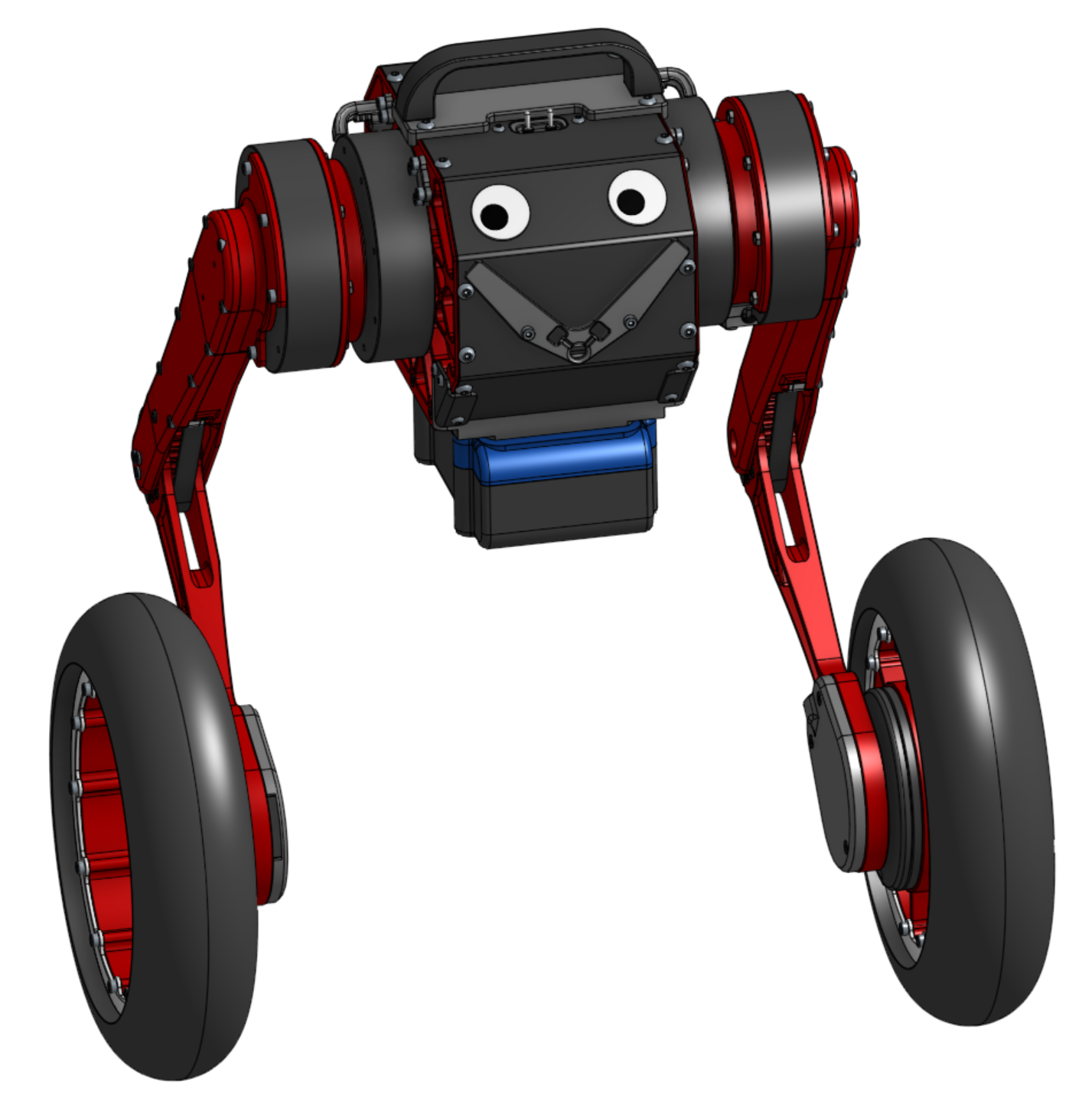

Stride - Wheeled Biped: MuJoCo Sim

Up until now, I’ve managed to build and experiment with my robots without relying much on simulators—other than occasionally using Jared’s Mini Cheetah simulator for quadruped work. But as reinforcement learning and imitation learning continue to push the boundaries of robotics, it’s become clear that having access to a reasonably accurate simulator is essential.

I decided to use MuJoCo, partly because I had tinkered with it in the past, and partly because DeepMind provides several Colab notebooks demonstrating how to train control policies for legged robots.

The first step was creating a proper robot model.

On the CAD side, I tend to be meticulous: modeling every bolt, assigning material properties, and overriding masses for printed parts or COTS assemblies. To prepare for MuJoCo, I split the design into the bodies I expected to use. The only real adjustment was making sure each subassembly’s origin was placed at the joint location, and aligning all of the coordinate frames in CAD with MuJoCo’s convention—so everything would drop in cleanly without extra transforms.

From the detailed CAD models, I extracted the physical parameters I needed: total mass, center of mass location, and the inertia diagonal for each link.
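
As a rough illustration of how those three quantities map onto a MuJoCo body, here is a minimal sketch that builds an `<inertial>` element with Python's standard `xml.etree.ElementTree`. The `LinkInertia` class, the `add_inertial` helper, the body name, and every number are hypothetical placeholders rather than values from Stride's CAD; only the `pos`, `mass`, and `diaginertia` attribute names come from MJCF.

```python
from dataclasses import dataclass
import xml.etree.ElementTree as ET


@dataclass
class LinkInertia:
    """Per-link values read from CAD (the numbers used below are placeholders)."""
    mass: float          # kg
    com: tuple           # center of mass in the body frame, meters
    diag_inertia: tuple  # (Ixx, Iyy, Izz) about the center of mass, kg*m^2


def _fmt(values) -> str:
    """Format a sequence of floats as a space-separated MJCF attribute."""
    return " ".join(f"{v:.6g}" for v in values)


def add_inertial(body: ET.Element, link: LinkInertia) -> ET.Element:
    """Attach an MJCF <inertial> child to a <body> element."""
    return ET.SubElement(
        body,
        "inertial",
        pos=_fmt(link.com),
        mass=f"{link.mass:.6g}",
        diaginertia=_fmt(link.diag_inertia),
    )


# Hypothetical thigh link; the name and values are for illustration only.
thigh = ET.Element("body", name="left_thigh", pos="0 0.1 0")
add_inertial(thigh, LinkInertia(0.45, (0.0, 0.01, -0.08), (1.2e-3, 1.1e-3, 2.5e-4)))
thigh_xml = ET.tostring(thigh, encoding="unicode")
```

Using only the diagonal assumes the products of inertia are negligible in the chosen frame. MJCF also accepts a `fullinertia` attribute for links where that assumption does not hold.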

I then built simplified geometry to serve as the simulation bodies and collision meshes. I preserved individual part coordinate frames and joint locations so that the kinematics would remain consistent with the CAD. For the legs, I chose to explicitly export both left and right STL meshes rather than relying on mirroring in MuJoCo—avoiding the risk of orientation mix-ups later on.

I then set about writing the MuJoCo XML, using coarse STL exports of the CAD as body meshes. For now, this level of detail is sufficient, though if collision computations become too expensive once I start training policies, I’ll consider substituting simpler primitives. I validated the XML iteratively using simulate.exe, which was invaluable for catching mistakes early.
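
To make the mesh-based layout concrete, here is a small sketch of the kind of MJCF structure involved, plus a cheap pre-check that every mesh a geom refers to is actually declared as an asset. The `undeclared_meshes` helper, the model fragment, and all names in it are hypothetical. It only catches naming slips and is no substitute for loading the file in the MuJoCo viewer.

```python
import xml.etree.ElementTree as ET
from pathlib import PurePath


def undeclared_meshes(mjcf_text: str) -> list:
    """Return mesh names used by <geom mesh="..."> that no <asset><mesh> declares."""
    root = ET.fromstring(mjcf_text)
    declared = set()
    for mesh in root.findall("asset/mesh"):
        # MJCF falls back to the file name without extension when name is omitted.
        name = mesh.get("name") or PurePath(mesh.get("file", "")).stem
        declared.add(name)
    used = {geom.get("mesh") for geom in root.iter("geom") if geom.get("mesh")}
    return sorted(used - declared)


# Hypothetical model fragment with a deliberate typo in the second geom.
EXAMPLE = """
<mujoco model="example">
  <asset>
    <mesh name="thigh_left" file="thigh_left.stl"/>
    <mesh file="thigh_right.stl"/>
  </asset>
  <worldbody>
    <body name="left_thigh">
      <geom type="mesh" mesh="thigh_left"/>
    </body>
    <body name="right_thigh">
      <geom type="mesh" mesh="thigh_rigth"/>
    </body>
  </worldbody>
</mujoco>
"""

missing = undeclared_meshes(EXAMPLE)
```

Here `missing` contains the misspelled `thigh_rigth`, which is the sort of left/right slip that is easy to make when both sides are exported as separate meshes.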

For actuation, I modeled the hip and knee joints with position servos and the wheels with velocity servos, which is reasonably close to the real hardware’s 20 kHz PD motor controllers. My first instinct was to match that servo frequency directly and step the simulation at 20 kHz (a 50 µs timestep), but performance was prohibitively slow (not surprising, but worth testing). I ultimately settled on 2 kHz (a 0.5 ms timestep), which still captures the motor dynamics well enough since the real controllers track so tightly. The higher-level control loop runs at 500 Hz in simulation, just like on the physical robot.
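
The two rates fit together as four physics steps per control update. The sketch below shows that arithmetic, an MJCF fragment using MuJoCo's `<position>` and `<velocity>` actuator shortcuts, and a loop that holds each command across the intermediate physics steps. The joint names, gains, and the `run`, `controller`, and `physics_step` names are assumptions for illustration, not the actual Stride setup.

```python
import xml.etree.ElementTree as ET

PHYSICS_HZ = 2000  # simulation rate from the text
CONTROL_HZ = 500   # high-level control rate from the text

TIMESTEP = 1.0 / PHYSICS_HZ                   # 0.0005 s
STEPS_PER_CONTROL = PHYSICS_HZ // CONTROL_HZ  # 4 physics steps per control tick

# MJCF fragment: joint names and gains are placeholders, not Stride's values.
ACTUATOR_XML = f"""
<mujoco>
  <option timestep="{TIMESTEP}"/>
  <actuator>
    <position name="left_hip_servo" joint="left_hip" kp="50"/>
    <position name="left_knee_servo" joint="left_knee" kp="50"/>
    <velocity name="left_wheel_servo" joint="left_wheel" kv="1"/>
  </actuator>
</mujoco>
"""
option = ET.fromstring(ACTUATOR_XML).find("option")  # confirms the fragment parses


def run(duration_s, controller, physics_step):
    """Call controller at CONTROL_HZ and physics_step at PHYSICS_HZ."""
    n_control_ticks = round(duration_s * CONTROL_HZ)
    for tick in range(n_control_ticks):
        command = controller(tick / CONTROL_HZ)
        # Hold the command constant across the intermediate physics steps.
        for _ in range(STEPS_PER_CONTROL):
            physics_step(command)


# Stand-ins that only count calls. With the mujoco Python bindings,
# physics_step would typically write the command into data.ctrl and then
# call mujoco.mj_step(model, data).
calls = {"control": 0, "physics": 0}


def fake_controller(t):
    calls["control"] += 1
    return 0.0


def fake_physics_step(command):
    calls["physics"] += 1


run(0.1, fake_controller, fake_physics_step)
```

Over 0.1 s of simulated time this makes 50 controller calls and 200 physics steps.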

For visualization and user input, I leaned on pygame, since I had experience with it from high school projects. I added support for driving the robot with a Logitech F310 gamepad, which made testing quick and intuitive.
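
A minimal sketch of the input side might look like the following. It takes any object with a `get_axis(index)` method, which is what a `pygame.joystick.Joystick` provides, and turns two stick axes into a drive command. The function names, axis indices, sign conventions, deadband, and speed limits are all assumptions; the F310's axis layout depends on its mode switch.

```python
def apply_deadband(value: float, deadband: float = 0.1) -> float:
    """Zero out small stick noise and rescale the rest to span [-1, 1]."""
    if abs(value) <= deadband:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - deadband) / (1.0 - deadband)


def read_drive_command(joystick, forward_axis=1, turn_axis=0,
                       max_speed=1.0, max_yaw_rate=2.0):
    """Map two stick axes to (forward speed in m/s, yaw rate in rad/s)."""
    # Many pads report "stick pushed forward" as a negative axis value,
    # so the sign is flipped here. Positive yaw meaning "turn left" is an
    # assumed convention.
    forward = -apply_deadband(joystick.get_axis(forward_axis))
    turn = -apply_deadband(joystick.get_axis(turn_axis))
    return forward * max_speed, turn * max_yaw_rate


class FakeStick:
    """Stand-in for a pygame joystick so the mapping can be tried without hardware."""

    def __init__(self, axes):
        self.axes = axes

    def get_axis(self, index):
        return self.axes[index]


# Stick pushed fully forward, centered left/right.
speed, yaw_rate = read_drive_command(FakeStick([0.0, -1.0]))
```

With real hardware, the `joystick` argument would be `pygame.joystick.Joystick(0)` created after `pygame.init()`, and `pygame.event.pump()` (or a normal event loop) needs to run each frame so the axis values update.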

Finally, I ported the existing C++ robot controller from hardware into Python. With that in place, the simulated robot could stand up, balance, control its gimbal, adjust body height, and regulate roll—all working smoothly.

At this stage, my primary goal is to move toward developing a general-purpose RL balancing policy, so I don’t plan on porting my jumping or stepping controllers just yet. That said, simulation has been surprisingly fun to work with—so it’s tempting to keep expanding the capabilities.